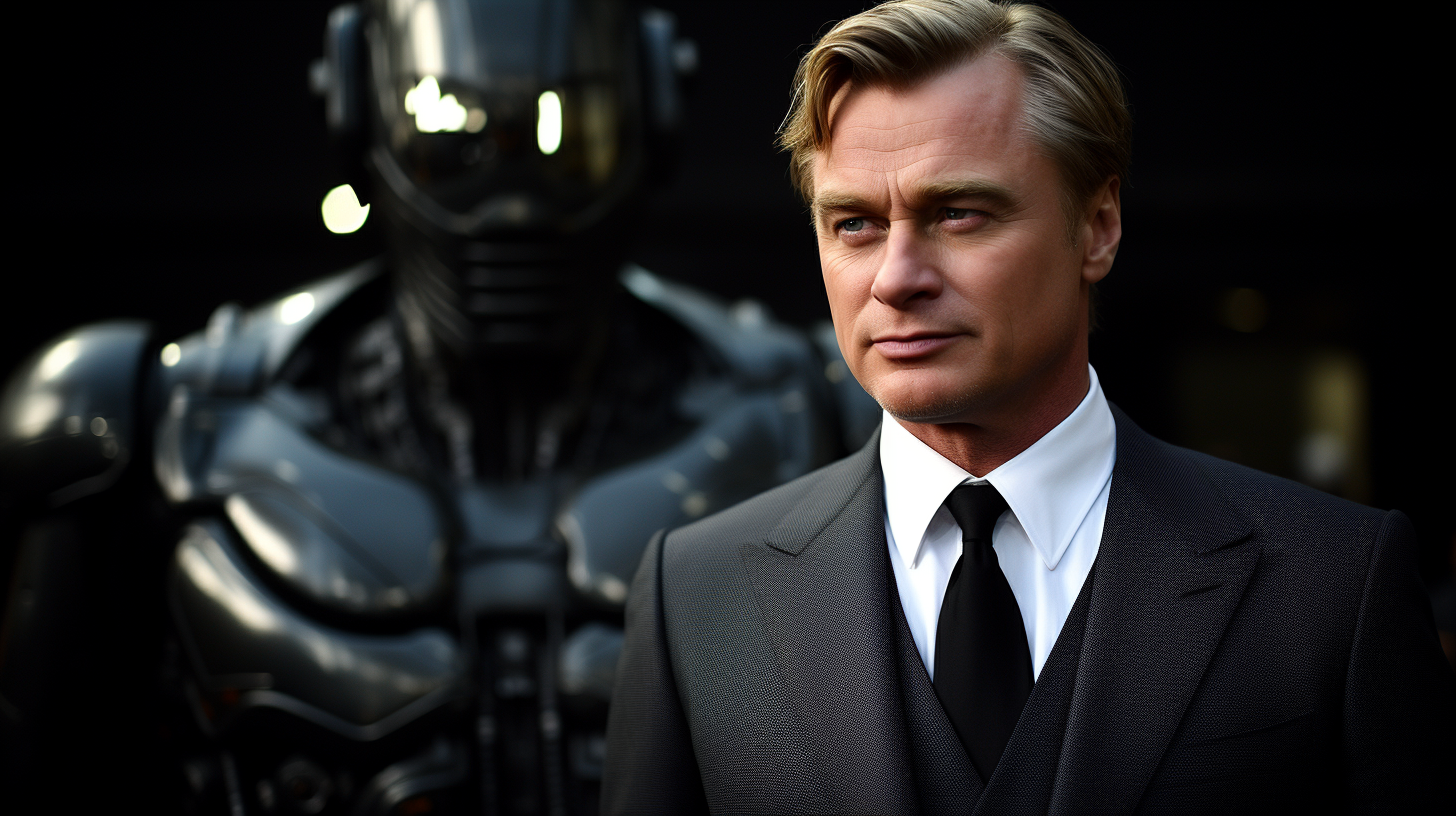

Christopher Nolan is quite wary of AI, and maybe for good reason: he predicts it will control nuclear weapons in the future.

Following a special screening of Oppenheimer, Christopher Nolan expressed concerns about the "terrifying possibilities" of AI, comparing the modern-day scientists researching and developing artificial intelligence to his film's title figure, Oppenheimer, and his work on the atomic bomb.

Variety reported on Nolan's thoughts on AI, and they're not that optimistic. AI doesn't only concern the actors and writers currently on strike; basically, it's the whole world that should be wary.

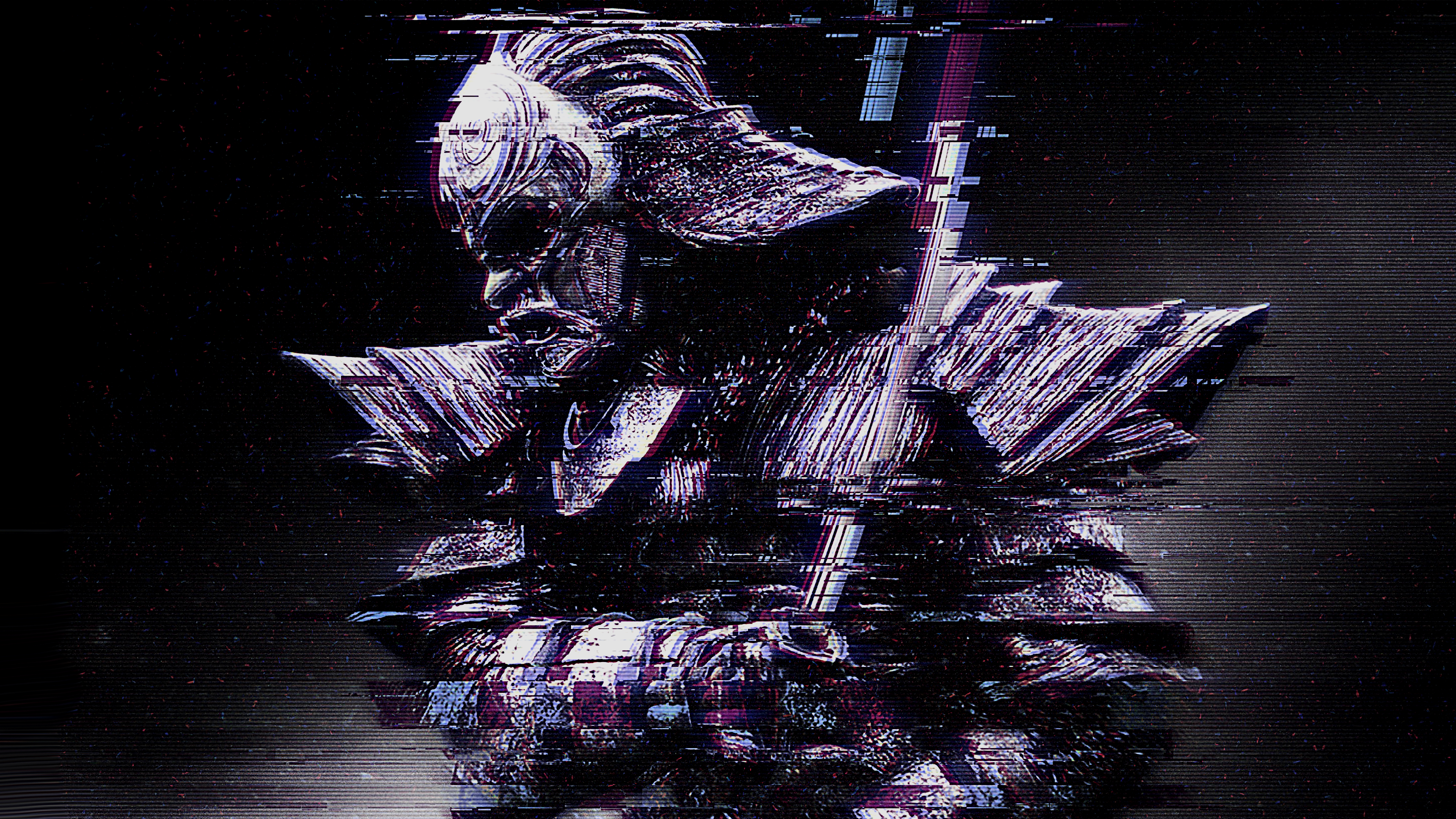

AI Will Be In Charge Of Nuclear Weapons

Talking about algorithms and how few people know what an algorithm actually is or what it does, Nolan claimed that people in the AI industry "just don’t want to take responsibility for whatever that algorithm does." As he explained:

Applied to AI, that's a terrifying possibility. Terrifying. Not least because, AI systems will go into defensive infrastructure ultimately. They’ll be in charge of nuclear weapons. To say that that is a separate entity from the person wielding, programming, putting that AI to use, then we’re doomed. It has to be about accountability. We have to hold people accountable for what they do with the tools that they have.

Thinking about the matter that way, Christopher Nolan successfully implanted a new fear into my brain that will keep me up at night.

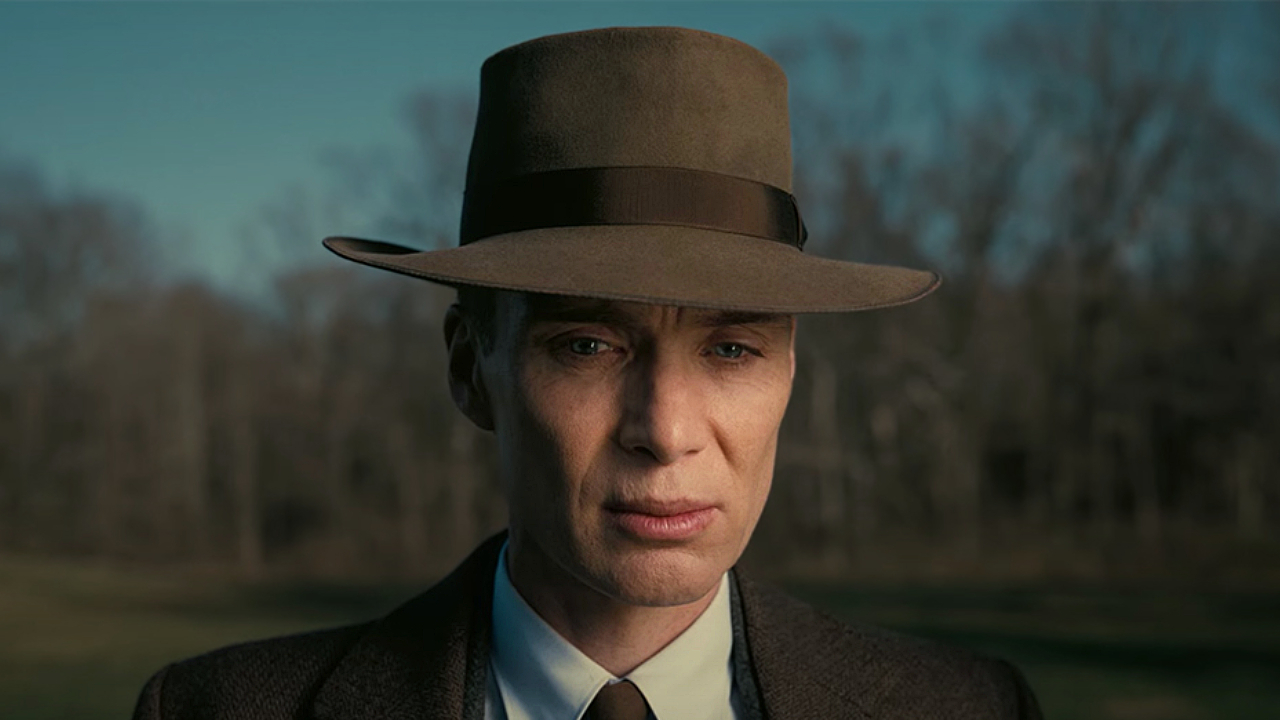

"They refer to this – right now – as their Oppenheimer moment."

Atomic bombs and AI don't seem to pose the same threats at first glance, but the comparisons Nolan draws do make sense. The way Oppenheimer's story relates to today's scientists is actually kind of frightening.

“When I talk to the leading researchers in the field of AI right now, for example, they literally refer to this – right now – as their Oppenheimer moment,” Nolan went on. “They’re looking to history to say, ‘What are the responsibilities for scientists developing new technologies that may have unintended consequences?’”

To think we might be living in a time we'll one day look back on and say, "Well, AI wasn't the best idea humanity's ever had," right before blowing up in a nuclear explosion. Let's just hope there's no mad scientist out there preparing to doom us all...